|

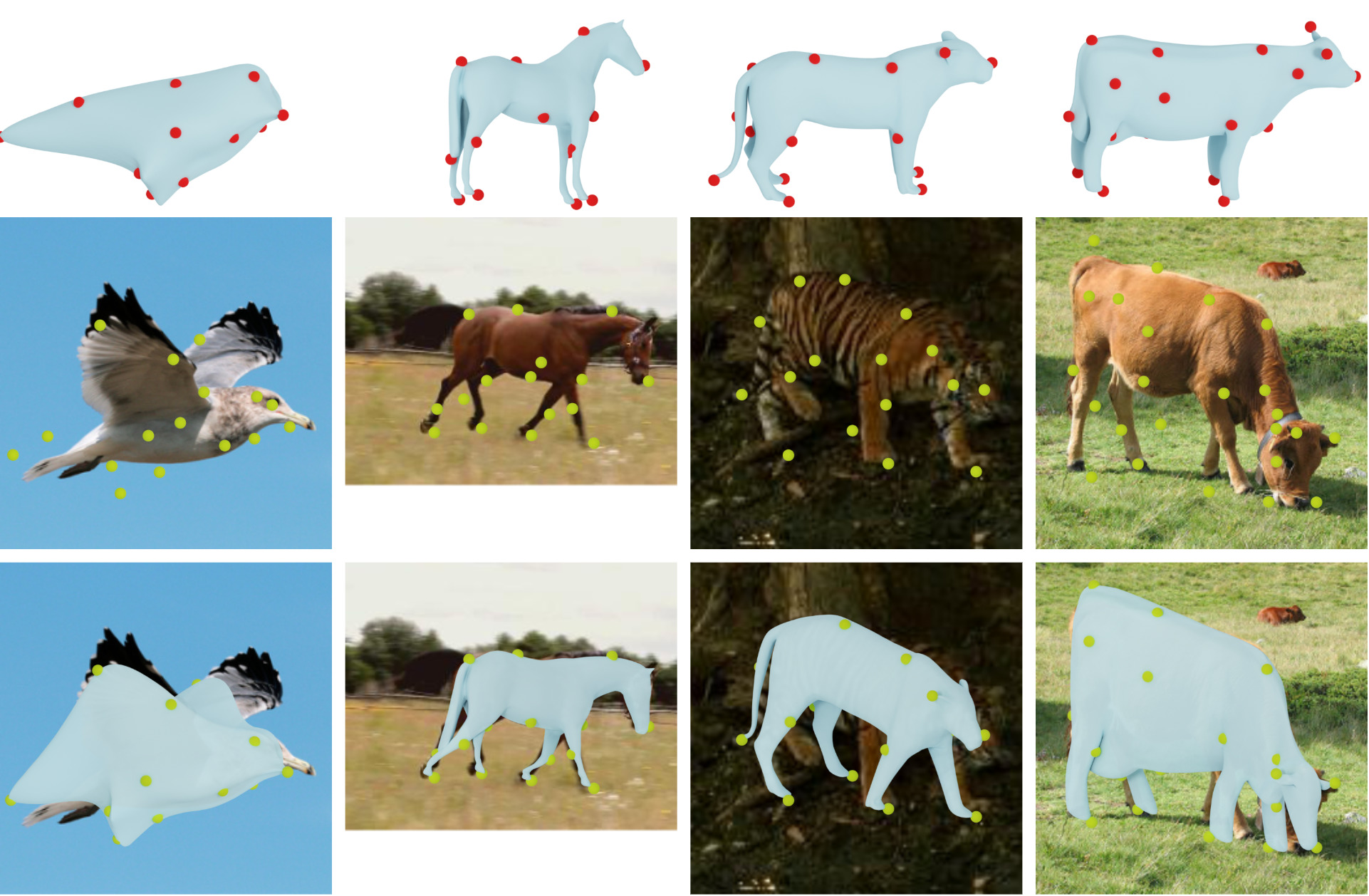

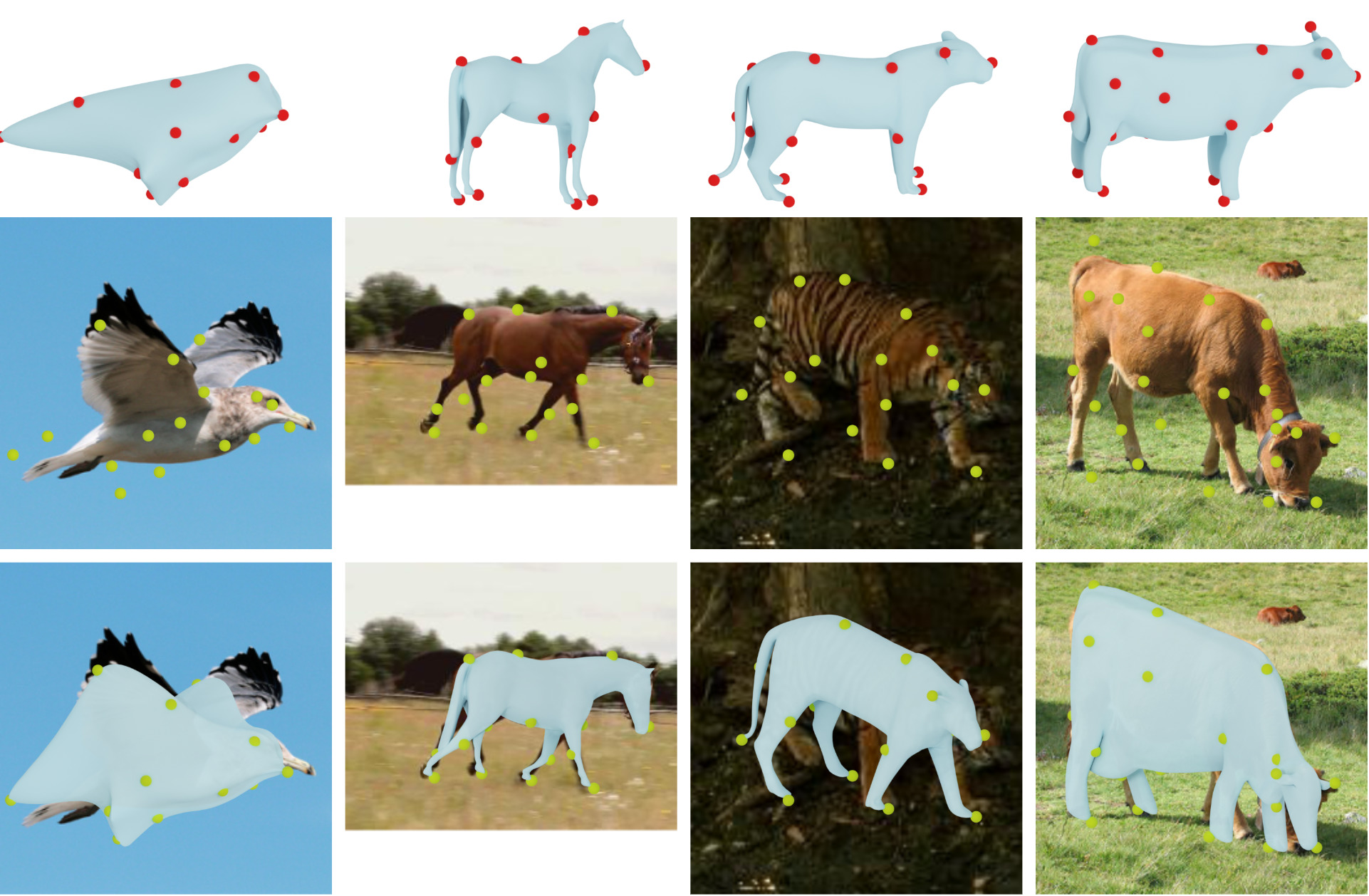

We tackle the problem of monocular 3D reconstruction for articulated object categories by guiding the deformation of a mesh template (top) through a sparse set of 3D control points that a network regresses from a single image (middle). Despite using only weak supervision in the form of keypoints, masks, and video-based correspondence, our approach captures broad articulations, such as opening wings and motion of the lower limbs and neck (bottom).

|